For anyone shipping software in regulated industries, the word “control” is everywhere. Compliance frameworks demand controls, auditors verify that controls are in place, engineering teams implement controls, and there are even Control Owners. But what exactly is a control? And more importantly, how do we design controls that serve their intended purpose while enabling, rather than hindering, delivery velocity?

Here at Kosli, I’ve spent a lot of time with enterprises understanding their complex compliance requirements. The challenge I see when it comes to automating a control is understanding what the collection of manual processes, spreadsheets, and screenshots is actually for, and how it is supposed to provide evidence that we’re mitigating risk. I’ve found the following model helpful for thinking about controls.

Controls: The Basics

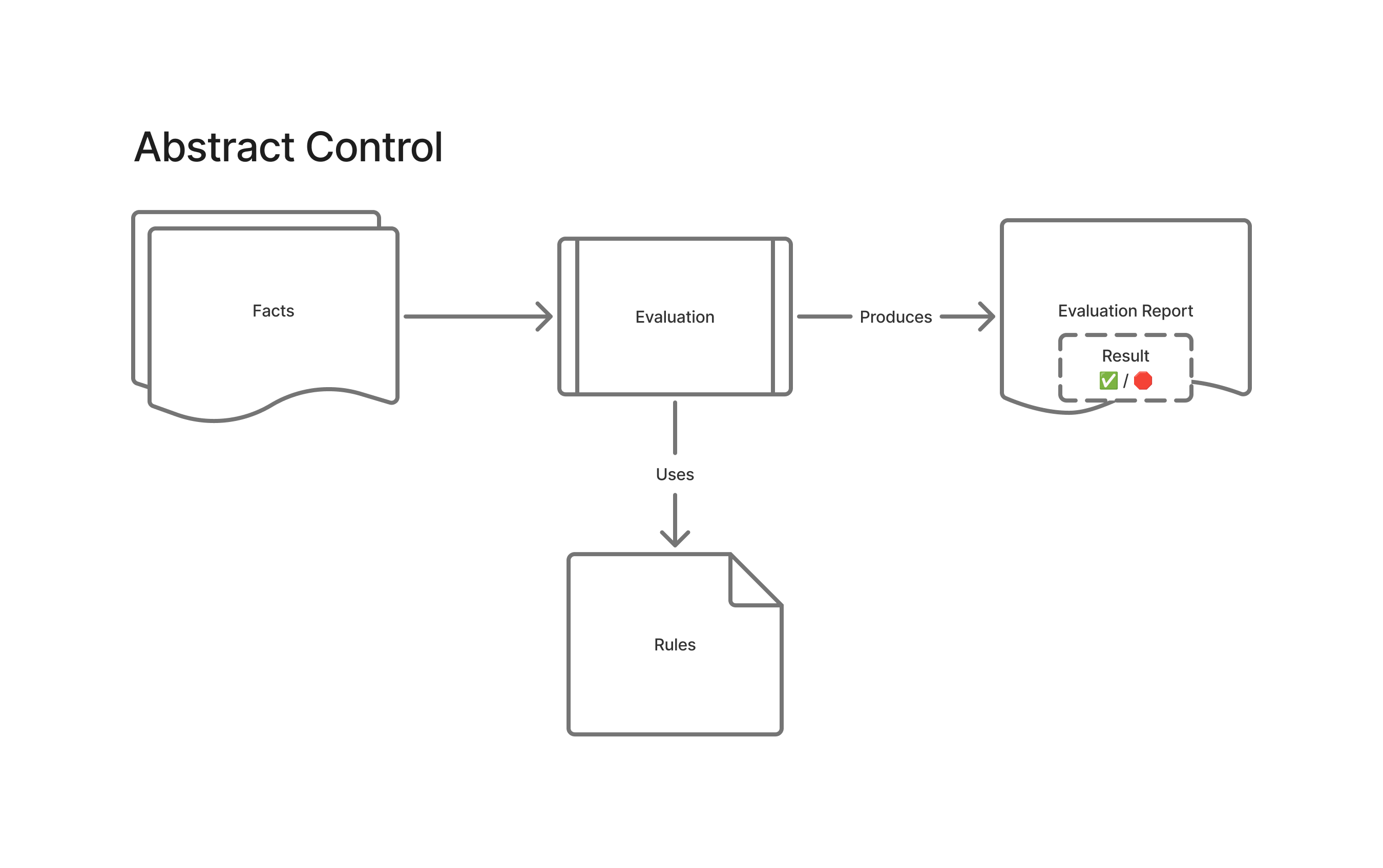

At their most abstract level, every control, whether manual or automated, shares the same fundamental components:

- Facts: The raw data or evidence being evaluated

- Rules: The criteria used to assess those facts

- Evaluation: The process of applying rules to facts

- Evaluation Report: Documentation of the decision and its rationale

It seems obvious when you look at it: do these facts meet our criteria? However, for any control to be effective in a regulated environment, you need to be able to trace back through the decision-making process. Which facts were considered? What rules were applied? How was the evaluation conducted? That’s where the Evaluation Report is essential, and it is where much of the manual documentation needed to close controls comes from.

Think of it like showing your workings-out in a maths exam. The answer matters, but the workings are what prove you understand the process and can be trusted to get it right consistently.
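
To make the model concrete, here is a minimal sketch in Python. The names (`Rule`, `EvaluationReport`, `evaluate`) and the two example rules are my own illustrative assumptions, not part of any particular product or framework. The point is only that the report keeps the workings (facts and per-rule results) alongside the answer.

```python
from dataclasses import dataclass
from typing import Any, Callable


# A rule is a named predicate over the facts. All names here are illustrative.
@dataclass(frozen=True)
class Rule:
    name: str
    check: Callable[[dict[str, Any]], bool]


@dataclass(frozen=True)
class EvaluationReport:
    facts: dict[str, Any]          # which facts were considered
    rule_results: dict[str, bool]  # which rules were applied, and how each came out
    passed: bool                   # the overall decision


def evaluate(facts: dict[str, Any], rules: list[Rule]) -> EvaluationReport:
    """Apply every rule to the facts and record the workings, not just the answer."""
    rule_results = {rule.name: rule.check(facts) for rule in rules}
    return EvaluationReport(
        facts=dict(facts),
        rule_results=rule_results,
        passed=all(rule_results.values()),
    )


# Hypothetical example rules: no failing tests, and at least one approver.
rules = [
    Rule("tests_passed", lambda f: f.get("failed_tests") == 0),
    Rule("has_approval", lambda f: len(f.get("approvers", [])) >= 1),
]

report = evaluate({"failed_tests": 0, "approvers": []}, rules)
print(report.passed)        # False
print(report.rule_results)  # {'tests_passed': True, 'has_approval': False}
```

Because the report records each rule's outcome, a reviewer can see that the control failed on the missing approval rather than on the tests.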

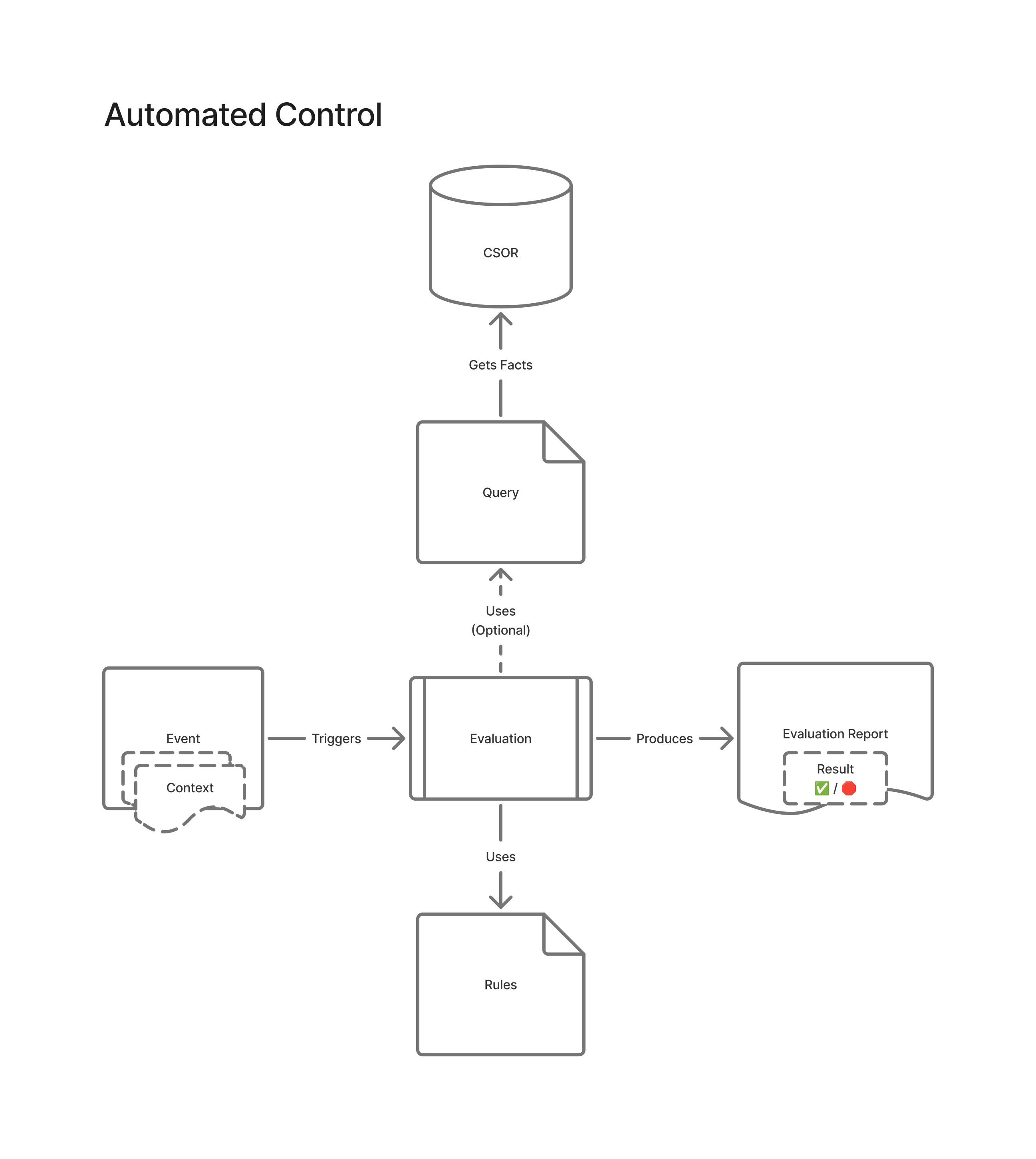

Making Controls Automated and Event-Driven

When it comes to automating controls, we don’t want people to have to go and find the right data, take screenshots, and put it all together into a spreadsheet. The control itself needs to know how to do all of that.

In an automated control system, we can be more precise about the flow:

- Event: Something happens (a build completes, a deployment starts, a schedule triggers)

- Context: Specific contextual information about the event (git commit, artifact ID, repository, test results, etc.)

- Query (Optional): The system can proactively gather the additional facts it needs from a Compliance System of Record (CSOR), using the event context

- Rules: The same evaluation criteria, but now machine-readable and consistently applied

- Evaluation Report: Automatically generated documentation with full traceability
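
The flow above can be sketched roughly as follows. This is a simplified illustration under stated assumptions: `InMemoryCSOR`, `handle_event`, the `RULES` format, and the artifact identifiers are all hypothetical stand-ins, and a real CSOR would be an external system rather than a dictionary.

```python
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class Event:
    kind: str                # e.g. "deployment_started"
    context: dict[str, Any]  # e.g. artifact ID, git commit, repository


class InMemoryCSOR:
    """Hypothetical stand-in for a Compliance System of Record."""

    def __init__(self, records: dict[str, dict[str, Any]]):
        self._records = records

    def facts_for(self, artifact_id: str) -> dict[str, Any]:
        # Return whatever evidence has been recorded for this artifact.
        return dict(self._records.get(artifact_id, {}))


# Machine-readable rules: fact name -> required value (a deliberately simple format).
RULES = {"unit_tests": "passed", "security_scan": "passed"}


def handle_event(event: Event, csor: InMemoryCSOR) -> dict[str, Any]:
    # Query: use the event context to gather the facts the rules need.
    facts = csor.facts_for(event.context["artifact_id"])
    # Evaluate: a fact that is missing counts as a failure.
    failures = sorted(
        name for name, required in RULES.items() if facts.get(name) != required
    )
    return {
        "event": event.kind,
        "context": event.context,
        "facts": facts,
        "rules": RULES,
        "compliant": not failures,
        "failures": failures,
    }


csor = InMemoryCSOR({"sha256:abc": {"unit_tests": "passed", "security_scan": "failed"}})
event = Event("deployment_started", {"artifact_id": "sha256:abc", "git_commit": "1a2b3c"})
result = handle_event(event, csor)
print(result["compliant"], result["failures"])  # False ['security_scan']
```

Treating missing evidence as a failure is a design choice in this sketch: an artifact the CSOR knows nothing about fails every rule instead of passing silently.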

For an automated Evaluation Report to provide full traceability, it must capture four essential elements:

- Context: The specific circumstances that triggered the evaluation – which event occurred, when it happened, and what entity (artifact, deployment, repository) it relates to

- Facts: The actual data gathered and evaluated – test results, approval records, scan outputs, or whatever evidence was collected from the CSOR or event payload

- Rules: The criteria that were applied – what conditions needed to be met, what thresholds were set, what policies were in force at the time of evaluation

- Result: The outcome of applying the rules to the facts – pass/fail determination, any exceptions or warnings, and the rationale connecting the evidence to the decision

This structure ensures that anyone reviewing the report later, whether an auditor, a compliance officer, or a future engineer, can reconstruct exactly why a particular decision was made at a particular point in time.
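
As a rough illustration of those four elements, the sketch below builds a report and serialises it to JSON so it can be stored and reviewed later. The field names, the vulnerability-threshold rule, the rule version string, and the sample values are all assumptions made up for this example.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Any


@dataclass(frozen=True)
class Report:
    context: dict[str, Any]  # which event, when, and which entity
    facts: dict[str, Any]    # the evidence that was evaluated
    rules: dict[str, Any]    # the criteria in force at evaluation time
    result: dict[str, Any]   # outcome plus rationale


def build_report(event: str, entity: str, facts: dict[str, Any],
                 rules: dict[str, Any], evaluated_at: datetime) -> Report:
    # Hypothetical rule: critical vulnerabilities must not exceed a threshold.
    max_critical = rules["max_critical_vulnerabilities"]
    found = facts["critical_vulnerabilities"]
    passed = found <= max_critical
    rationale = f"{found} critical vulnerabilities found; at most {max_critical} allowed"
    return Report(
        context={"event": event, "entity": entity,
                 "evaluated_at": evaluated_at.isoformat()},
        facts=facts,
        rules=rules,
        result={"passed": passed, "rationale": rationale},
    )


report = build_report(
    event="build_completed",
    entity="artifact:sha256:abc",
    facts={"critical_vulnerabilities": 2},
    rules={"version": "2024-01", "max_critical_vulnerabilities": 0},
    evaluated_at=datetime(2024, 1, 15, 9, 30, tzinfo=timezone.utc),
)
print(json.dumps(asdict(report), indent=2, sort_keys=True))
```

Storing the rules (including a version) inside the report, rather than a pointer to the current policy, is what lets a reader see which criteria applied at the time, even after the policy has changed.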

Designed this way, automated controls can be both more rigorous and more efficient than manual ones: every evaluation is documented, every decision is traceable, and the criteria are applied consistently across all events.

Why Design Matters

This isn’t just theoretical architecture; it has practical implications for how we build governance systems:

Auditability by Design: When controls are structured this way, audit preparation becomes dramatically simpler. Instead of scrambling to reconstruct decision-making processes months after the fact, you have complete trails for every evaluation.

Consistency at Scale: Manual controls suffer from human variability: different people applying the same rules can reach different conclusions. Automated controls eliminate this variability while maintaining the flexibility to evolve rules as requirements change.

Context-Aware Decision Making: By connecting events to broader system context through queries against your CSOR, automated controls can make more informed decisions than isolated manual checks.

Continuous Compliance: Rather than point-in-time assessments, this model enables continuous evaluation of compliance posture, catching issues earlier and reducing the risk of compliance drift.

The enterprises that will thrive in increasingly regulated environments are those that move beyond thinking about compliance as a series of checkboxes and start designing it as an integrated part of their delivery systems. Controls aren’t obstacles to velocity. When properly designed, they’re the foundation that makes rapid, confident delivery possible.