Imagine for a moment that agentic coding tools really do deliver on their promise. Code is written faster, tests are generated automatically, and refactors that once took days now take minutes. On paper, software delivery should accelerate dramatically.

Now imagine you work in a regulated enterprise. The code is ready, but production is still days or weeks away. There are forms to complete, screenshots to gather, evidence to assemble, tickets to update, change records to submit, change advisory board (CAB) meetings to attend, and people to wait for. The bottleneck hasn’t moved.

When Speed Doesn’t Matter

Let’s put some numbers on it. If your end-to-end lead time is six weeks and coding takes one day, then even cutting coding time in half saves you just half a day. Lead time drops from six weeks to five weeks and six and a half days, and every step outside coding remains completely untouched.
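This arithmetic is Amdahl’s law applied to delivery: speeding up one stage can only remove that stage’s share of the total. Below is a minimal Python sketch. It assumes six weeks means 42 calendar days and that nothing outside the coding step changes. The function name is illustrative and is not taken from any tool.

```python
def lead_time_after_speedup(
    lead_time_days: float, coding_days: float, coding_time_cut: float
) -> float:
    """End-to-end lead time after cutting coding time by a fraction.

    coding_time_cut=0.5 means coding now takes half as long. Every other
    stage (approvals, evidence, CAB) is assumed to be unchanged.
    """
    if not 0.0 <= coding_time_cut <= 1.0:
        raise ValueError("coding_time_cut must be between 0 and 1")
    if not 0.0 <= coding_days <= lead_time_days:
        raise ValueError("coding_days must fit inside the lead time")
    non_coding_days = lead_time_days - coding_days
    return non_coding_days + coding_days * (1.0 - coding_time_cut)


six_weeks = 42.0  # assumption: calendar days
for cut in (0.5, 1.0):
    new_lead_time = lead_time_after_speedup(six_weeks, coding_days=1.0, coding_time_cut=cut)
    shorter_by = 1 - new_lead_time / six_weeks
    print(f"coding time cut by {cut:.0%} -> {new_lead_time} days ({shorter_by:.1%} shorter)")
```

Even if coding took no time at all, lead time would only shrink from 42 to 41 days, about 2.4%.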

This is the uncomfortable reality in regulated environments: coding speed is rarely the constraint. The constraint sits downstream, where software must prove it is safe, compliant, approved, and auditable before it can reach production.

Faster In, Bigger Batches Out

There’s a second-order effect that often goes unnoticed. If the change process stays the same but teams can now generate more code in the same amount of time, they won’t send changes through individually — they’ll batch them.

This isn’t reckless behaviour; it’s rational. When every change incurs the same fixed overhead, teams respond by putting more into each release. Over time, changes grow larger, harder to reason about, and riskier to roll back. Speed increases at the front of the pipeline, while risk accumulates at the end.
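The following minimal Python sketch shows why batching is the rational response. It uses two hypothetical numbers: a fixed 20-hour cost for every pass through the change process, and a 5% chance that any single change is faulty. It also assumes defects are independent, which real changes rarely are, so treat the output as illustrative only.

```python
FIXED_OVERHEAD_HOURS = 20.0  # hypothetical cost of one pass through the change process
DEFECT_PROBABILITY = 0.05    # hypothetical chance that a single change is faulty


def overhead_per_change(fixed_overhead_hours: float, batch_size: int) -> float:
    """Fixed release overhead (forms, evidence, CAB) shared across one batch."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return fixed_overhead_hours / batch_size


def batch_failure_probability(defect_probability: float, batch_size: int) -> float:
    """Chance a batch contains at least one faulty change, assuming independent defects."""
    return 1.0 - (1.0 - defect_probability) ** batch_size


for batch_size in (1, 5, 10, 20):
    overhead = overhead_per_change(FIXED_OVERHEAD_HOURS, batch_size)
    risk = batch_failure_probability(DEFECT_PROBABILITY, batch_size)
    print(f"batch={batch_size:>2}  overhead/change={overhead:5.1f}h  "
          f"P(batch contains a faulty change)={risk:.0%}")
```

With these assumed numbers, moving from single changes to batches of ten cuts the overhead per change from 20 hours to 2. Over the same range, the chance that a batch contains at least one faulty change rises from 5% to about 40%. Each individual decision looks cheaper, while the release as a whole gets riskier.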

This pattern is well understood in queueing theory. As described in a practical analysis of Little’s Law applied to ready-for-testing queues, when upstream work moves faster than downstream capacity, work doesn’t flow — it accumulates. Cycle time increases not because people are slow, but because items sit waiting in front of the bottleneck. Teams can feel productive pulling more work, while the system as a whole becomes less responsive.
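Little’s Law states that, in a stable system, average work in progress equals throughput multiplied by average time in the system. When arrivals exceed downstream capacity, the system is not stable. Throughput is capped at capacity, so the extra work can only show up as a growing queue and longer waits. The deterministic Python simulation below illustrates this. The arrival and capacity figures are hypothetical, and real queues are noisier.

```python
from collections import deque


def simulate_queue(
    arrivals_per_day: int, capacity_per_day: int, days: int
) -> tuple[int, float]:
    """Deterministic FIFO queue in front of a fixed-capacity downstream stage.

    Returns (changes still waiting at the end, average wait in days of the
    changes that made it through).
    """
    queue: deque[int] = deque()  # arrival day of each change still waiting
    waits: list[int] = []
    for day in range(days):
        queue.extend([day] * arrivals_per_day)  # upstream delivers new changes
        # Downstream clears at most its capacity, oldest changes first.
        for _ in range(min(capacity_per_day, len(queue))):
            waits.append(day - queue.popleft())
    average_wait = sum(waits) / len(waits) if waits else 0.0
    return len(queue), average_wait


for arrivals in (5, 8):
    for days in (10, 20):
        backlog, average_wait = simulate_queue(arrivals, capacity_per_day=5, days=days)
        print(f"{arrivals}/day for {days} days: backlog={backlog}, "
              f"average wait={average_wait:.2f} days")
```

At five changes a day against a capacity of five, nothing waits. At eight a day, the backlog grows by three every day. After 10 days the average completed change has waited 1.86 days; after 20 days it has waited 3.74 days, and 60 changes are still queued. The wait keeps growing for as long as the imbalance lasts, even though the downstream stage never slows down.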

Agentic coding tools accelerate exactly this dynamic. They increase the rate at which work arrives at a downstream process — compliance, approvals, evidence collection — that hasn’t fundamentally changed.

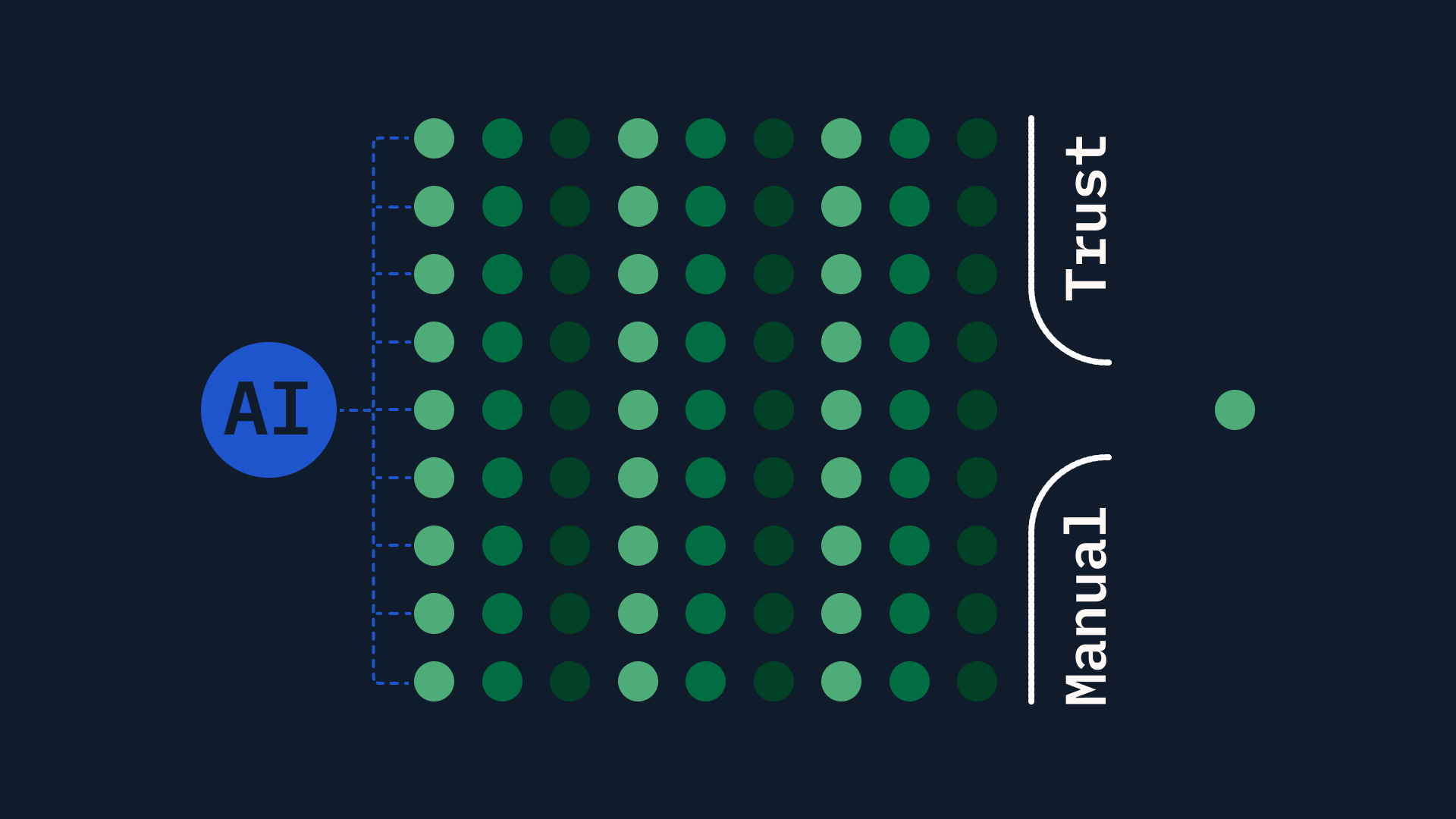

The Trust Layer Becomes the Bottleneck

What’s really happening is that the trust layer hasn’t evolved at the same pace as development. Because delivery systems don’t share lineage, evidence, or control results, trust is still created manually — through forms, screenshots, spreadsheets, ServiceNow updates, and human sign-off.
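Policy-as-code replaces some of that manual sign-off with checks that run against evidence the pipeline already produces. The Python sketch below is a minimal illustration, not a production policy engine; dedicated tools such as Open Policy Agent exist for that. The `ChangeRecord` fields, the `CHG-001` identifier and the three policies are hypothetical examples of controls an organisation might define.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class ChangeRecord:
    """Hypothetical evidence a CI/CD pipeline could attach to a change automatically."""
    change_id: str
    tests_passed: bool
    peer_reviewed: bool
    open_security_findings: int


# A policy inspects the evidence and returns a violation message, or None if satisfied.
Policy = Callable[[ChangeRecord], Optional[str]]


def require_passing_tests(change: ChangeRecord) -> Optional[str]:
    return None if change.tests_passed else "automated tests did not pass"


def require_peer_review(change: ChangeRecord) -> Optional[str]:
    return None if change.peer_reviewed else "no recorded peer review"


def require_clean_security_scan(change: ChangeRecord) -> Optional[str]:
    if change.open_security_findings == 0:
        return None
    return f"{change.open_security_findings} open security findings"


POLICIES: List[Policy] = [require_passing_tests, require_peer_review, require_clean_security_scan]


def evaluate(change: ChangeRecord, policies: List[Policy] = POLICIES) -> List[str]:
    """Run every policy and collect violations; an empty list means the change may proceed."""
    violations = []
    for policy in policies:
        message = policy(change)
        if message is not None:
            violations.append(message)
    return violations


change = ChangeRecord("CHG-001", tests_passed=True, peer_reviewed=True, open_security_findings=2)
violations = evaluate(change)
status = "blocked: " + "; ".join(violations) if violations else "approved"
print(f"{change.change_id}: {status}")
```

In a pipeline, a non-empty violation list could fail the deployment step. The evaluated record could then serve as audit evidence in place of a screenshot.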

Every change pays the same recurring cost to pass through this layer — the velocity tax. As throughput increases, that cost becomes more visible and more painful, accumulating into velocity debt over time. Agentic coding doesn’t remove this friction; it simply sends more work into it.

The Uncomfortable Conclusion

Faster coding barely moves lead time in a regulated environment, and it actively encourages bigger batches. This is already showing up in the data. The 2024 DORA report estimates that every 25% increase in AI adoption is associated with a 7.2% reduction in delivery stability.

Code faster. Bigger batches. Worse systems.

For large enterprises operating under heavy regulation, it’s difficult to justify investing heavily in agentic coding tools without also — or even before — investing in automating evidence collection, embedding compliance into CI/CD, and implementing policy-as-code.

Otherwise, you’re not removing the bottleneck. You’re just hitting it harder.